Pandas Read Parquet File

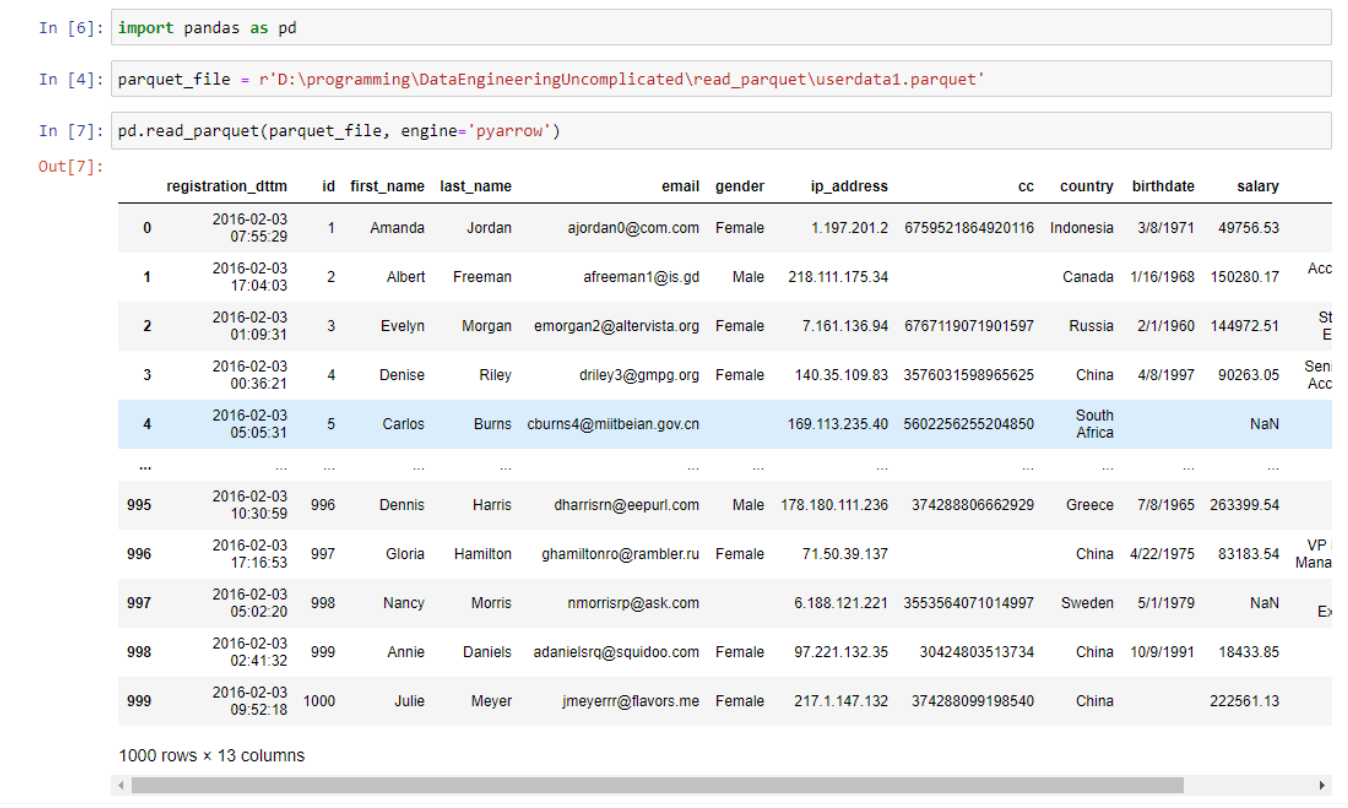

The `read_parquet` method is used to load a Parquet file into a DataFrame: it loads a Parquet object from the file path and returns a DataFrame. In recent pandas releases the signature is `pandas.read_parquet(path, engine='auto', columns=None, storage_options=None, use_nullable_dtypes=_NoDefault.no_default, dtype_backend=_NoDefault.no_default, filesystem=None, filters=None, **kwargs)`. The `path` parameter is the file path to the Parquet file. See the pandas user guide for more details.
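As a minimal sketch of the call described above, the helper below wraps `pd.read_parquet` and passes `columns` through so that only a subset of columns is read. The helper name `load_parquet` and the file name in the usage note are illustrative assumptions, and a Parquet engine such as pyarrow is assumed to be installed.

```python
def load_parquet(path, columns=None, engine="auto"):
    """Load a Parquet file into a DataFrame, optionally keeping only some columns."""
    # Local import: pandas and a Parquet engine (pyarrow or fastparquet)
    # must be installed before this function is called.
    import pandas as pd

    # columns=None reads every column; a list restricts the read to those names.
    return pd.read_parquet(path, engine=engine, columns=columns)
```

A call such as `load_parquet("data.parquet", columns=["a"])` would return a DataFrame containing only column `a`, provided the file has a column with that name.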

To get started, install the packages with `pip install pandas pyarrow`. The `path` parameter accepts a string, a path object, or a file-like object, and by default pandas reads all the columns in the Parquet file. Note that the often-quoted call `df = pd.read_parquet('path/to/parquet/file', skiprows=100, nrows=500)` is not valid: `skiprows` and `nrows` belong to `read_csv`, and `read_parquet` has no such parameters. Use `columns` to limit which columns are read, and select rows after loading.
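Because `read_parquet` has no `skiprows` or `nrows` parameters, a row window has to be selected some other way. The sketch below loads the file and then slices by position; the helper names `row_window` and `read_parquet_rows` are hypothetical. It reads the whole file before slicing, so it suits files that fit comfortably in memory.

```python
def row_window(skiprows=0, nrows=None):
    """Turn CSV-style skiprows/nrows into a positional slice."""
    if skiprows < 0 or (nrows is not None and nrows < 0):
        raise ValueError("skiprows and nrows must be non-negative")
    stop = None if nrows is None else skiprows + nrows
    return slice(skiprows, stop)


def read_parquet_rows(path, skiprows=0, nrows=None, columns=None):
    """Read a Parquet file, then keep only rows [skiprows, skiprows + nrows)."""
    # Local import: pandas and a Parquet engine must be installed to call this.
    import pandas as pd

    df = pd.read_parquet(path, columns=columns)
    return df.iloc[row_window(skiprows, nrows)]
```

With these helpers, `read_parquet_rows('path/to/parquet/file', skiprows=100, nrows=500)` expresses the intent of the invalid call quoted above.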

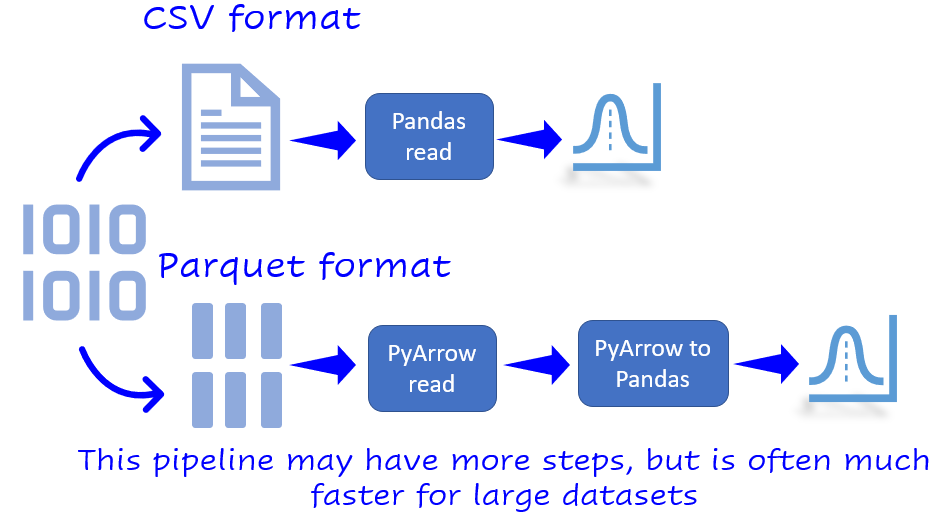

There are two methods for reading partitioned Parquet files in Python: using pandas' `read_parquet()` function and using PyArrow's `ParquetDataset` class. With the second method, the data is read through `pyarrow.parquet.ParquetDataset` and then converted to a pandas DataFrame.

Add filters parameter to pandas.read_parquet() to enable PyArrow

The `read_parquet` method is used to load a Parquet file into a DataFrame. Its `filters` parameter hands filter predicates to the engine, so that with PyArrow, rows that do not match can be dropped while the file is being read.
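A hedged sketch of filtering at read time follows. It assumes the pyarrow engine is installed; the helper name `read_filtered` and the column name `year` are made up for illustration. Filters are written as a list of `(column, operator, value)` tuples.

```python
def read_filtered(path, filters, columns=None):
    """Read a Parquet file, keeping only rows that satisfy the given filters."""
    # Local import: pandas and pyarrow must be installed to call this.
    import pandas as pd

    # The pyarrow engine is requested explicitly because row-wise filtering
    # depends on the engine that evaluates the predicates.
    return pd.read_parquet(path, engine="pyarrow", columns=columns, filters=filters)
```

For example, `read_filtered("events.parquet", [("year", ">=", 2020)])` would keep only rows whose `year` value is at least 2020, assuming such a column exists in the file.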

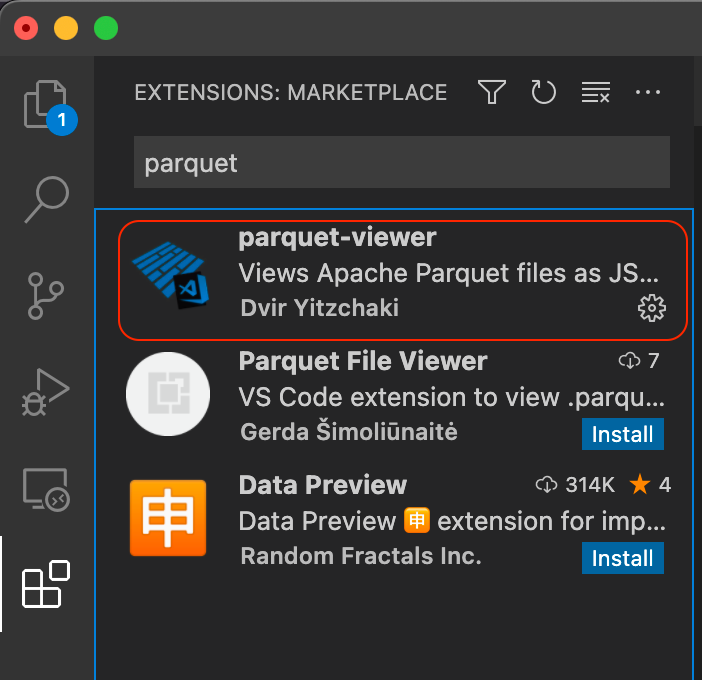

How to read (view) Parquet file ? SuperOutlier

You can use DuckDB for this. It is an embedded RDBMS similar to SQLite, but designed with OLAP in mind.

[Solved] Python save pandas data frame to parquet file 9to5Answer

Another approach is to read the file with an alternative utility, such as `pyarrow.parquet.ParquetDataset`, and then convert the result to pandas. The answer that proposes this notes that its code was not tested.
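The alternative route through PyArrow might look like the sketch below, which assumes pyarrow is installed. The helper name `dataset_to_pandas` is hypothetical; `path` may point to a single Parquet file or to a directory of Parquet files.

```python
def dataset_to_pandas(path, columns=None):
    """Read Parquet data through pyarrow's ParquetDataset and convert it to pandas."""
    # Local import: pyarrow (and pandas, for the conversion) must be installed.
    import pyarrow.parquet as pq

    dataset = pq.ParquetDataset(path)
    # read() returns a pyarrow.Table; columns=None reads every column.
    table = dataset.read(columns=columns)
    return table.to_pandas()
```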

Pandas Read Parquet File into DataFrame? Let's Explain

In one test, DuckDB, Polars, and pandas (using chunks) were all able to convert CSV files to Parquet. Polars was one of the fastest tools for converting data, and DuckDB had low memory usage.

Why you should use Parquet files with Pandas by Tirthajyoti Sarkar

Earlier pandas releases documented a shorter signature, `pandas.read_parquet(path, engine='auto', columns=None, **kwargs)`, with the same purpose: load a Parquet object from the file path, returning a DataFrame. The `path` parameter is a string, a path object, or a file-like object.

Pandas Read File How to Read File Using Various Methods in Pandas?

When writing a DataFrame to Parquet with `DataFrame.to_parquet`, you can choose different Parquet backends, and you have the option of compression.
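A small sketch of choosing the backend and compression when writing is shown below. The helper name `save_parquet` is an assumption for illustration; `engine` and `compression` are passed straight to `DataFrame.to_parquet`, and `index=False` is a choice made here to leave the index out of the file.

```python
def save_parquet(df, path, engine="auto", compression="snappy"):
    """Write a DataFrame to a Parquet file with the chosen engine and compression."""
    # engine may be "auto", "pyarrow", or "fastparquet";
    # compression may be, for example, "snappy", "gzip", or None.
    df.to_parquet(path, engine=engine, compression=compression, index=False)
```

For example, `save_parquet(df, "out.parquet", compression="gzip")` trades some write speed for a smaller file.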

How to read (view) Parquet file ? SuperOutlier

One question loads the file with `data = pd.read_parquet(file)`, initializes `result = []`, and then iterates over the rows with `for index in data.index:`. In the pandas API on Spark, `read_parquet` additionally accepts `index_col` (str or list of str, optional, default `None`), the index column of the table in Spark.

pd.to_parquet Write Parquet Files in Pandas • datagy

Parameters: `path` (string) is the file path, and `columns` (list, default `None`) restricts the read: if not `None`, only these columns will be read from the file. In Spark, by contrast, the file is read as a Spark DataFrame: `april_data = sc.read.parquet('somepath/data.parquet…`

pd.read_parquet Read Parquet Files in Pandas • datagy

The file used in the examples is less than 10 MB.

pandas.read_parquet(path, engine='auto', columns=None, storage_options=None, use_nullable_dtypes=_NoDefault.no_default, dtype_backend=_NoDefault.no_default, **kwargs)

An earlier form of the signature is `pandas.read_parquet(path, engine='auto', columns=None, storage_options=None, use_nullable_dtypes=False, **kwargs)`. One reported workflow is a Python script that reads in an HDFS Parquet file, converts it to a pandas DataFrame, loops through specific columns and changes some values, and writes the DataFrame back to a Parquet file.
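The read, modify, and write-back workflow can be sketched as follows. This is an illustration under stated assumptions, not the original script: it uses plain file paths rather than HDFS, and the helper name `rewrite_columns` and the idea of a `replacements` mapping are hypothetical.

```python
def rewrite_columns(src, dst, columns, replacements):
    """Read a Parquet file, replace values in the given columns, and write it back."""
    # Local import: pandas and a Parquet engine must be installed to call this.
    import pandas as pd

    df = pd.read_parquet(src)
    for col in columns:
        # Map old values to new ones; values not in the mapping are left unchanged.
        df[col] = df[col].replace(replacements)
    df.to_parquet(dst, index=False)
    return df
```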

It Could Be The Fastest Way Especially For.

You could use pandas and read Parquet from a stream. DuckDB is another route: after `import duckdb`, open a connection with `conn = duckdb.connect(":memory:")`, or pass a file name instead to persist the database. The answer that suggests this cautions that the approach does not support partitioned datasets.
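A hedged sketch of the DuckDB route is given below. It assumes the `duckdb` package and pandas are installed; the helper names `parquet_select_sql` and `duckdb_read_parquet` are hypothetical. The query uses DuckDB's `read_parquet` table function, and the result is converted to a pandas DataFrame with `.df()`.

```python
def parquet_select_sql(path, columns=None):
    """Build a SELECT statement over DuckDB's read_parquet table function."""
    # Double any single quotes so the path is a valid SQL string literal.
    escaped = str(path).replace("'", "''")
    if columns:
        # Quote column names as identifiers, doubling embedded double quotes.
        col_list = ", ".join('"' + c.replace('"', '""') + '"' for c in columns)
    else:
        col_list = "*"
    return f"SELECT {col_list} FROM read_parquet('{escaped}')"


def duckdb_read_parquet(path, columns=None):
    """Query a Parquet file with an in-memory DuckDB database; return a DataFrame."""
    # Local import: the duckdb package (and pandas, for .df()) must be installed.
    import duckdb

    conn = duckdb.connect(":memory:")  # or a file name to persist the database
    try:
        return conn.execute(parquet_select_sql(path, columns)).df()
    finally:
        conn.close()
```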

This File Is Less Than 10 MB

Reading with pandas can be very helpful for a small dataset, since a Spark session is not required. Refer to What is Pandas in Python to learn more about pandas.

It Reads As A Spark DataFrame: april_data = sc.read.parquet('somepath/data.parquet…

`geopandas.read_parquet(path, columns=None, storage_options=None, **kwargs)` loads a Parquet object from the file path, returning a GeoDataFrame.